(#5) Mon Jan 24th, 2022 - Colocation

January 24, 2022

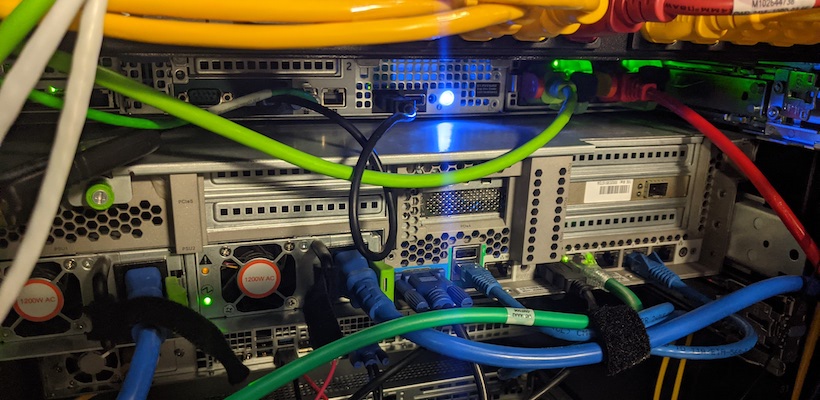

Looks like I missed a day. I was going to talk about something but I ended up falling asleep last night. The 100 day blogging challenge is over. I don't think a post a day is going to be sustainable for me while keeping the subject matter palatable and interesting for the reader. Today, though, we'll talk about setting up a colocation business since that's what I've been up to the past few weeks. My aim with these posts is to be transparent about operating an ISP, and to inspire others to do the same. The world will be better when everyone can access the internet. By learning, collaborating, and experiencing different mindsets, cultures, and perspectives, we can all become better to each other and ourselves.

Fitchburg Fiber is primarily a WISP (Wireless Internet Service Provider) that's branching into the fiber business slowly. I guess you could call it a hybrid FISP (Fiber ISP). Our biggest issue at the moment is plain and simple: money. The largest cost for a new ISP, most of the time, is an upstream internet connection. Whether you're getting some simple IP service from your local fiber provider or peering via BGP with a nearby global carrier, costs in the Northeast US have been trending towards $1500 per month for an unmetered symmetrical gigabit connection. If you're in a data center the costs are almost always much lower, but I'd wager most people starting ISPs don't have data centers in their neighborhood.

We use Cogent as our upstream and pay a hair over $1300 per month for a gigabit circuit. It brings us directly back to One Summer St. in the heart of Boston about 41 miles away as the crow flies. We're 5ms away from Cloudflare, AWS, and Facebook, and 6-10ms from Google and many others. Not bad! We peer with Cogent via BGP to announce our autonomous system (AS399134) to the public internet along with 256 public IP addresses (52.124.25.0/24) that we got at auction. Since we don't have many customers at the moment, a portion of our monthly recurring costs is being paid from capital we've personally injected into the business over the past years. As we grow, this amount will shrink and we will reach and pass the break-even point, becoming profitable. Until then, we've got a question on our hands: how do we generate more revenue to stay afloat while spending as little money as possible? My answer: colocation.

For the colo customer who isn't a major tech company, reliability and access are trumped by one factor most of the time: cost. We're approaching the cost model in a straightforward way: $75 per rack-unit per month for a gigabit connection. Bring your own ASN and IPs or use one of ours. Simple, right? Maybe not so much.
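For a rough sense of scale, here is a minimal sketch of how many rented rack units it would take for colo revenue alone to cover the upstream circuit. It uses only the two figures quoted above ($75 per rack unit and roughly $1300 per month for upstream) and deliberately ignores power, hardware, and every other cost, so treat the result as a lower bound and not as a business plan. The function name is made up for illustration.

```python
import math

PRICE_PER_RACK_UNIT = 75   # USD per month, as quoted in the post
UPSTREAM_COST = 1300       # USD per month; the post says "a hair over" this


def rack_units_to_cover(monthly_cost, price_per_unit=PRICE_PER_RACK_UNIT):
    """Smallest whole number of rack units whose rent covers monthly_cost."""
    return math.ceil(monthly_cost / price_per_unit)


print(rack_units_to_cover(UPSTREAM_COST))  # 18
```

Eighteen rack units is less than half of a standard 42U rack, which is why colo looks attractive on paper.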

I've personally been a colo customer for a few years now and there's more to it (from the perspective of the provider) than just putting someone's server in your rack and giving them internet. If only it were that easy. There are a few major concerns in my mind with shared space colocation:

- Physical access to the equipment

- Monitoring bandwidth usage of customer ports

- Providing out of band management access

- Keeping everything online

To harp on the first one a bit, shared space colo is difficult and requires immense amounts of trust. All of your customers are renting space in a rack per U (rack unit). This means their servers may be racked up right above or below someone else's equipment. Ensuring the environmental conditions are favorable such that one server doesn't overheat the servers above and below it is critical. There's also literally nothing stopping a customer from strolling in and unplugging someone's stuff. The answers to this problem: legal contracts, audited tap card access, and TONS of cameras. Simple!

As a new ISP, we don't have infinite bandwidth to throw around like a larger provider might seem to. This means we have to be very conservative with what types of customers we seek out and engage with. We simply can't acquire a colo customer that's going to install 24U of servers and run an Alexa top 100 website generating gigabits per second of traffic. While that'd be nice, it'd require major upgrades to our infrastructure and upstream internet connection, neither of which we can afford at the moment. Likewise, we can't let in large-scale crypto miners that could potentially consume many thousands of kWh per month. Power isn't free and our plans are probably priced a bit too competitively for this use case. Because of this, we're only looking for smaller businesses, hobbyists, and prosumers that may need small hosting, offsite backup, or other always-online services like that.

Sticking to the tried and true 95th percentile bandwidth paradigm seems like a safe bet for us. Most customers are allowed 20Mbps of bandwidth at the 95th percentile. They still have connections capable of a gigabit burst, but if at the end of the month their 95th percentile traffic is over 20Mbps, we simply bill them $2 per Mbps they go over. This isn't as much of a deterrent as it may seem as most customers don't even come near 1Mbps after the month is over. It simply sets an expectation and it works well enough for us. Most of our bandwidth should be allocated to our traditional residential and business customers anyways. We are an ISP after all.
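The billing rule above can be sketched in a few lines of Python. This is an illustration under assumptions, not our actual billing code: it assumes usage is sampled at regular intervals (5 minutes is common in the industry, but the interval isn't stated here), that each sample is already in Mbps, and that the 95th percentile is taken with the usual "sort and drop the top 5%" method. The function names are hypothetical; the 20Mbps allowance and $2 per Mbps rate come from the paragraph above.

```python
def percentile_95(samples_mbps):
    """95th percentile via the common 'sort and discard the top 5%' method."""
    if not samples_mbps:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_mbps)
    n = len(ordered)
    # ceil(0.95 * n) in integer math, converted to a zero-based index.
    index = (n * 95 + 99) // 100 - 1
    return ordered[index]


def overage_charge(samples_mbps, allowance_mbps=20.0, rate_per_mbps=2.0):
    """USD billed for 95th percentile traffic above the allowance."""
    p95 = percentile_95(samples_mbps)
    return max(0.0, p95 - allowance_mbps) * rate_per_mbps


# A customer idling at 1 Mbps who bursts to 900 Mbps for 5% of the month
# pays nothing extra, because the bursts land in the discarded top 5%.
bursty = [1.0] * 95 + [900.0] * 5
print(overage_charge(bursty))         # 0.0

# A customer sitting at a steady 30 Mbps is 10 Mbps over the allowance.
print(overage_charge([30.0] * 100))   # 20.0
```

The first example is the whole point of the model: short bursts at full line rate are free, and only sustained usage gets billed.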

Out-of-band management access is another managed service altogether that's fairly easy to provide and proves itself invaluable when your customers lock themselves out of their pfSense routers by somehow deleting the WAN interface. In our case, it was simple to solve. We've already got a WireGuard gateway that allows us in-band access to our management network. From there we can access WinBox, SSH, and other services on routers, switches, and servers within our corporate infrastructure. For colo customers, we're simply providing them a WireGuard configuration that allows them access to a VLAN that all of their IPMI, iDRAC, iLO, ESXi management net, etc. are plugged into. This way they're able to fix a lockout without having to go onsite.

But what if a server or other appliance completely locks up? We all hate that dreaded ESXi purple screen of death. To solve this we're also providing remote access to the APC PDU that their hardware is powered from. It's an AP8659NA3 if you're curious. Metered and switched per port, it allows us to keep track of customer power usage while also allowing them to log in and switch their ports off and on again to power cycle locked up systems. This isn't necessary most of the time now that IPMI and the like run on a separate SoC attached to the server's motherboard, but it's a nice option to have in an emergency.

The last point is a tough one to hit since power reliability is an expensive problem to solve when you aren't in a T1 data center. Most places in the US simply don't have the luxury of picking from multiple power providers. In our case it's just one, a utility company called Unitil. Even if we could get multiple feeds into the building, we're still at the mercy of an outage if a line goes down outside our building or if a transformer blows down the street. To plan for this contingency, we're investing in a 7kW natural gas powered generator with an automatic transfer switch for the two circuits that power our micro data center.

Since generators often take 10-20 seconds to spool up once the ATS decides the mains power has puttered out, we also need a UPS battery backup array large enough to hold us over for that short time until the generator takes over. The UPS will also provide power conditioning for the incoming power that may or may not be properly phased from the tiny explosions going on upstream at our generator. We think this strategy will take us a few years into the future, especially since we don't need to store liquid propane or natural gas on site. We can feasibly power the entire operation for days or weeks at a time with the grid entirely out at very little relative cost. Compared to the cost of having customers offline, we'd prefer the upfront cost of the equipment. That's the power of mains gas for you. While this may all seem like a bit of overkill since the last reported outage was over 5 years ago during a crazy blizzard, one can never be too prepared, especially with how our climate has been trending lately.
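To put numbers on the ride-through window, here is a small sketch of the arithmetic. It assumes the worst case where the load equals the full 7kW generator rating and the gap is the full 20 seconds; the real load isn't stated here and is presumably lower. It also ignores inverter losses and battery aging, so a real design would want generous headroom. The function names are hypothetical.

```python
def ride_through_energy_wh(load_watts, gap_seconds):
    """Energy in watt-hours needed to carry load_watts for gap_seconds."""
    return load_watts * gap_seconds / 3600


def runtime_seconds(usable_wh, load_watts):
    """Seconds a battery with usable_wh of usable energy can carry load_watts."""
    return usable_wh * 3600 / load_watts


# Worst case from the figures above: 7 kW of load for a 20 second gap.
needed = ride_through_energy_wh(7000, 20)
print(round(needed, 1))  # 38.9
```

Under 40 watt-hours is a tiny amount of stored energy. The catch is that the UPS still has to deliver the full load instantly, so the power rating of the UPS matters far more than the battery capacity for a gap this short.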

Moving into the future, we have plans to become completely carbon neutral and buy back carbon offsets. This will be accomplished with solar and/or wind arrays on the roof of our building with larger battery arrays indoors to store power. Hopefully the grid is more green at that point so we don't need to power a datacenter off of Mr. Sun alone. It's certainly a pipe dream but I can't wait for the day that we don't have to burn altered carbon to sustain our business when mother nature comes knocking.

Dear reader, thanks for doing so.

Live well.